Do Smartphone Apps Help Your Mental Health?

Can smartphones help your mental health? Goldberg et al. (2022) recently conducted a study to see whether smartphone apps can. The researchers’ conclusion?

“Taken together, these results suggest that mobile phone-based interventions may hold promise for modestly reducing common psychological symptoms (e.g., depression, anxiety), although effect sizes are generally small (Ed. — not true) and rarely do these interventions outperform other interventions intended to be therapeutic (i.e., specific active controls).”

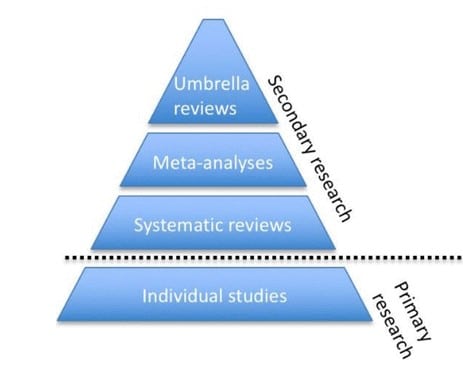

They conducted what is called a scientific “meta review” (also called an umbrella review or a systematic review of meta analyses). A meta review seeks to review the existing research literature, but only for certain studies — specifically meta-analyses. Meta-analyses are scientific reviews of individual experimental studies that look at the effects of those studies when combined with similar studies.

A meta review looks at a set of meta-analyses in order to formulate an even more generalized picture of the research literature.

What Data You Review is Important: Selection Bias

Every battle is won or lost before it is ever fought, says Sun Tzu. Nowhere is that more true than in scientific research — especially meta-analyses. A team of researchers can influence their results by limiting the data or the studies they choose to examine.

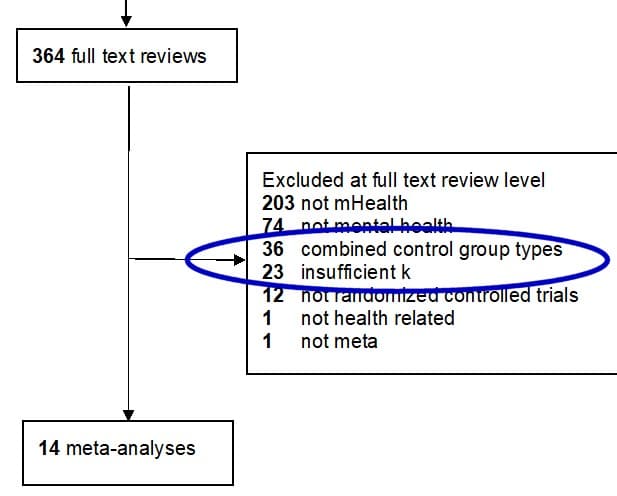

In Goldberg et al.’s (2022) case, they examined only 14 studies. They excluded a whopping 59 studies they could’ve looked at, but chose not to. These 59 studies likely would’ve changed the researchers’ findings (but who knows in what way?).

It’s a red flag to me when researchers exclude the majority of the studies they could’ve looked at. It suggests to me an active effort to bias the results. Researchers always stand behind their inclusion/exclusion criteria by suggesting they only want the most rigorous research to be included. But those criteria need to make sense and be justified. So let’s take a look at their justifications.

Arbitrary Number of Studies in the Meta-analysis

Twenty-three of the studies were excluded because the researchers had set their inclusion criteria to only look at meta-analyses that examined at least four (4) randomized controlled trials. Why four? The researchers give no explanation for this arbitrary number, but do provide a research citation to an article entitled, Conducting quantitative synthesis when comparing medical interventions: AHRQ and the Effective Health Care Program.

The article’s focus is on defining what makes a good comparative effectiveness review, one part of which typically includes meta-analyses. The authors note that for meta-regression (not the same as meta-analysis, by the way), the Cochrane handbook, the gold standard for examining research findings, suggests a minimum of 10 studies. The article then notes that the Cochrane handbook provided no justification for that number. Citing the non-justified Cochrane number, the same article then arbitrarily picks the number 4 as the minimum number of studies needed. Do you see the problem here yet?

There’s no scientific evidence suggesting that four is the “right” number, or even a good number. Consider a meta-analysis that looks at just two randomized controlled trials, each with over 1,000 subjects, vs. a meta-analysis that looks at four randomized controlled trials, each with fewer than 100 subjects. The first meta-analysis is looking at more than 2,000 points of data, while the second is looking at fewer than 400.
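To make that comparison concrete, here is a minimal Python sketch. The per-trial sample sizes are hypothetical round numbers standing in for “over 1,000” and “fewer than 100,” and the names `MIN_TRIALS` and `summarize` are illustrative, not anything from the researchers’ paper.

```python
# Hypothetical per-trial sample sizes matching the example in the text.
meta_a_trials = [1000, 1000]          # two large trials
meta_b_trials = [100, 100, 100, 100]  # four small trials

MIN_TRIALS = 4  # the trial-count cutoff described above


def summarize(trials):
    """Return trial count, total subjects, and whether the cutoff is met."""
    return {
        "n_trials": len(trials),
        "n_subjects": sum(trials),
        "passes_cutoff": len(trials) >= MIN_TRIALS,
    }


summary_a = summarize(meta_a_trials)
summary_b = summarize(meta_b_trials)

print("A:", summary_a)
print("B:", summary_b)
```

Under these assumed numbers, the meta-analysis with five times as many subjects is the one that fails a cutoff based only on the number of trials.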

In Goldberg’s study, the larger group of subjects is ignored because the researchers justified their minimum number of studies with a number from another article, a number that was itself arbitrary. Twenty-three of the 59 excluded studies were not even looked at for this reason. Other research articles cited by the authors didn’t employ this cutoff in their research design or recommendations (Dragioti et al., 2019; Cuijpers, 2021; Lecomte et al., 2020; Fusar-Poli & Radua, 2018).

A more thoughtful design might have looked at the total number of subjects that each meta-analysis covered, and then set a cutoff based upon that number. But honestly, if a meta-analysis is well-designed, why bother with any cutoff?

You Apparently Can’t Combine Control Groups Any More

Goldberg et al. (2022) also seemed to have a problem with randomized controlled studies that didn’t separate their control groups into those receiving an “active” control intervention and those receiving an “inactive” one. They excluded an astounding 36 studies due to this inclusion condition.

I get why the researchers went down this road — they wanted only certain studies in order to tease out the effects of active attention in an app vs. the app’s active treatment techniques. It’s a nuanced way of looking at this issue.

But the truth is that most psychotherapy and medical randomized controlled trials don’t differentiate between these two types of control groups. The current researchers are, in effect (and they acknowledge as much), creating a different gold standard of rigor for smartphone apps that most randomized controlled trials conducted in psychotherapy wouldn’t meet.

If that’s the gold standard researchers are going to hold smartphone apps to, then by all means, let’s go and take a look at all the past psychotherapy outcome research to see if any of it can meet the same criteria. Chances are, I think we’d find it wouldn’t hold up nearly as well (e.g., Michopoulos et al., 2021 showing that the control group chosen definitely impacts effect estimates in psychotherapy for depression).

What Does This Mean in Context?

I get that researchers need to make these kinds of decisions about their inclusion criteria. But the more studies you exclude, the less diverse the underlying data pool is. Instead of just 14 studies, these researchers could’ve examined data from 73 studies. Such a diverse pool would likely lead to more generalizable results, as the subject pool would’ve been larger and, by definition, less homogeneous.
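As a quick tally of the numbers reported so far, here is a short Python sketch. The counts come from this article; the variable names and the reason labels are only illustrative shorthand.

```python
# Counts as reported in this article.
included = 14
excluded_by_reason = {
    "fewer than four RCTs": 23,
    "control groups not separated": 36,
}

excluded = sum(excluded_by_reason.values())  # total excluded meta-analyses
eligible = included + excluded               # everything that could have been examined
share_excluded = excluded / eligible

print(f"Excluded {excluded} of {eligible} ({share_excluded:.0%})")
```

In other words, roughly four out of every five available meta-analyses never made it into the review.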

Effect sizes are how medical and psychology researchers determine whether an intervention or treatment has a small, medium, or large impact on a person’s desired outcome (like a reduction in number or severity of symptoms). Some have argued that the effect sizes found in psychotherapy research — the impact active treatment has on a person’s symptoms — are in the 0.5 to 0.8 range (a moderate to large effect).

But more recent research (e.g., Schafer & Schwarz, 2019; Bronswijk et al., 2019) demonstrates that effect sizes in psychological research have been inflated due to “publication bias and questionable research practices”:

From past publications without pre-registration, 900 effects were randomly drawn and compared with 93 effects from publications with pre-registration, revealing a large difference: Effects from the former (median r = 0.36) were much larger than effects from the latter (median r = 0.16).

In other words, effect sizes were roughly halved when these biases were removed from the research. This suggests that psychotherapy is likely to have an actual effect size of between 0.25 and 0.40 (which is exactly what Bronswijk et al., 2019 and others have found). Keep in mind, too, that most of this research doesn’t differentiate between an active and an inactive control group, which is the new gold standard the current researchers seem to be demanding of apps.
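The arithmetic behind that estimate can be sketched in a few lines of Python. This is a rough illustration only: the quoted medians are correlations (r), while the 0.5 to 0.8 range is a different effect-size metric, so applying one ratio to the other is an assumption made for the sake of the example. The variable names are illustrative.

```python
# Median effects quoted from Schafer & Schwarz (2019).
r_without_prereg = 0.36
r_with_prereg = 0.16

# How much of the original effect survives pre-registration.
shrink = r_with_prereg / r_without_prereg

# Commonly claimed range for psychotherapy effect sizes.
claimed = (0.5, 0.8)

# Simple halving, as used in the text.
halved = tuple(x * 0.5 for x in claimed)

# Applying the observed ratio instead (assumes it transfers across metrics).
scaled = tuple(round(x * shrink, 2) for x in claimed)

print(f"Surviving share of effect: {shrink:.2f}")
print("Halved range:", halved)
print("Ratio-scaled range:", scaled)
```

Halving gives the 0.25 to 0.40 range used here; applying the observed ratio directly would put the range slightly lower.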

The majority of the 14 meta-analyses of apps’ effectiveness the current researchers looked at had effect sizes ranging from 0.25 to 0.47, with most in the 0.30 to 0.47 range. Despite the researchers’ conclusions, that actually suggests apps have a pretty good impact on people’s anxiety, depression, stress, and smoking. These effect sizes are consistent with the likely actual effect sizes of active psychotherapy treatments.

Summary

That’s the takeaway here. The researchers found that, despite their rigorous exclusion of the majority of the studies in this area, apps proved to have effect sizes between the small and medium range (not all small, as the researchers claim). These effect sizes are consistent with effect sizes found elsewhere in the psychological literature for therapeutic interventions.

Or as the researchers say:

Taken together, results support the potential of mobile phone-based interventions and highlight key directions to guide providers, policy makers, clinical trialists, and meta-analysts working in this area.

Research will continue to be conducted in this area, and the sheer number of new studies will likely call for a future meta review to add to our understanding.

References

Bronswijk et al. (2019). Effectiveness of psychotherapy for treatment-resistant depression: a meta-analysis and meta-regression. Psychological Medicine.

Cuijpers, P. (2021). Systematic Reviews and Meta-analytic Methods in Clinical Psychology. Reference Module in Neuroscience and Biobehavioral Psychology.

Dragioti et al. (2019). Association of Antidepressant Use With Adverse Health Outcomes: A Systematic Umbrella Review. JAMA Psychiatry.

Fu R, Gartlehner G, Grant M, Shamliyan T, Sedrakyan A, Wilt TJ, et al. (2011). Conducting quantitative synthesis when comparing medical interventions: AHRQ and the Effective Health Care Program. J Clin Epidemiol.

Fusar-Poli, P. & Radua, J. (2018). Ten simple rules for conducting umbrella reviews. Evid Based Ment Health, 21(3), 95–100. pmid:30006442

Goldberg et al. (2022). Mobile phone-based interventions for mental health: A systematic meta-review of 14 meta-analyses of randomized controlled trials. PLOS Digital Health.

Lecomte et al. (2020). Mobile Apps for Mental Health Issues: Meta-Review of Meta-Analyses. JMIR mHealth and uHealth.

Michopoulos et al. (2021). Different control conditions can produce different effect estimates in psychotherapy trials for depression. Journal of Clinical Epidemiology.

Schafer, T. & Schwarz, M.A. (2019). The Meaningfulness of Effect Sizes in Psychological Research: Differences Between Sub-Disciplines and the Impact of Potential Biases. Frontiers in Psychology.